Is artificial intelligence, a technology aggressively advertised as the ultimate cure-all, fundamentally incompatible with transdisciplinarity and its decades-old insight that the “wicked” problems of the real world do not lend themselves to one-dimensional solutions? Should transdisciplinary research outright reject a technology that is already undermining efforts to achieve social and environmental justice? Or can artificial intelligence actually support transdisciplinary research when used responsibly?

Using artificial intelligence in transdisciplinary research requires a critical mindset

We believe that, used responsibly, artificial intelligence can support transdisciplinary research. However, we argue that the decision to use artificial intelligence as a tool in transdisciplinary research must rest on an acute awareness of the risks involved and on a critical mindset.

Such a critical mindset entails, first, a deconstruction of the term “artificial intelligence”. This involves realizing that it is not rigorously defined and has always been little more than a marketing slogan. A critical look at the term also considers the fact that it casually refers to a concept that is not yet well understood scientifically, namely intelligence. Furthermore, the term “artificial” is misleading, since, as Kate Crawford (2021) shows, artificial intelligence relies heavily on human labor and the exploitation of natural resources.

Second, a critical approach to artificial intelligence rejects the notion, prevalent in current discourse, of artificial intelligence in the singular. It recognizes that what is currently referred to as “artificial intelligence” or “AI” is actually based on a rather mundane technology called “machine learning,” which has a wide range of applications. We focus on generative artificial intelligence (also known as GenAI), the technology behind today’s most popular artificial intelligence applications, which, when prompted, produces new content similar to its training data. Framed as a general-purpose technology, generative artificial intelligence is arguably driving expectations of a wave of innovation in science and research.

Third, critically using current artificial intelligence in transdisciplinary research requires an awareness of its fundamental limitations. Large language models, the figureheads of generative artificial intelligence, are not truth machines that understand language and reason their way through problems as humans do. Instead, large language models are highly advanced forms of autocomplete that often produce uncannily sophisticated, but sometimes also laughably flawed, output.

Finally, a critical mindset adopts a human-centered approach to artificial intelligence. This means that artificial intelligence systems should augment or enhance human capabilities rather than replace them. It also implies that the output of generative artificial intelligence applications must be reviewed by competent humans before being used for public purposes. This perspective aligns well with Faye Miller’s recent, inspiring i2Insights contribution, “Five capacities for human–artificial intelligence collaboration in transdisciplinary research”, about the skill sets transdisciplinary researchers need to develop to “orchestrate human–artificial intelligence collaboration.”

Which tasks in transdisciplinary research can be addressed with generative artificial intelligence?

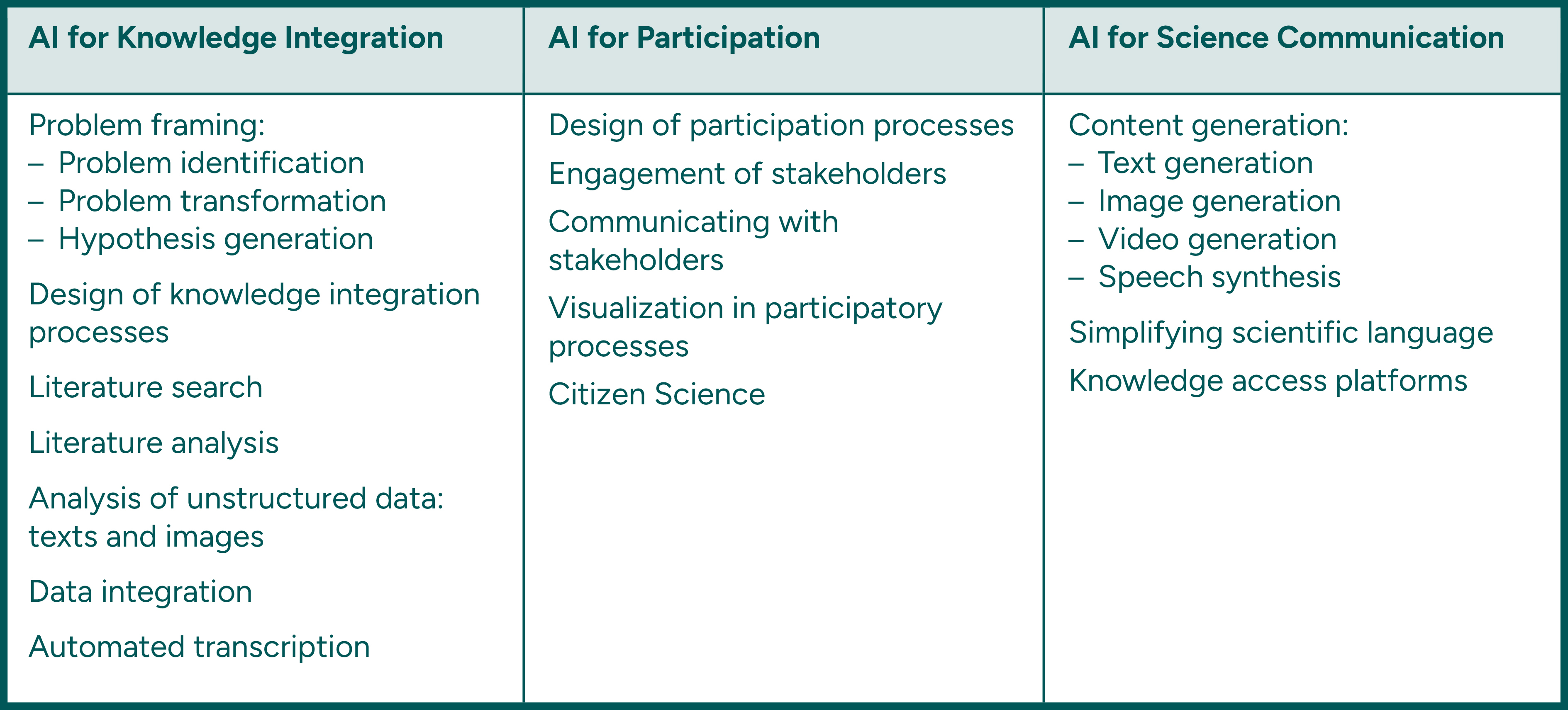

Adopting a critical mindset means understanding which artificial intelligence tools can be used for which tasks in transdisciplinary research, how to use them to leverage their potential while recognizing their inherent limitations, and how to identify and mitigate the risks they pose. In the table, we highlight three areas of transdisciplinary research where the use of generative artificial intelligence is particularly relevant: knowledge integration, stakeholder participation, and science communication. For each area, we identify key transdisciplinary research tasks that are amenable to artificial intelligence.

Authors

Florian Keil

Florian Keil PhD is the founder of ai4ki, a start-up that develops custom artificial intelligence solutions for science and science management.

Melina Stein

Melina Stein has been a research scientist at ISOE since 2015.

Flurina Schneider

Flurina Schneider is Scientific Director of ISOE and Professor of Social Ecology and Transdisciplinarity at Goethe University Frankfurt. Her research focuses on learning and action for sustainability transformations, transdisciplinary research, and science policy.

Here we focus on one particular question: Can generative artificial intelligence help with knowledge integration? To answer this question, we distinguish between weak and strong knowledge integration. We define the latter as the cognitive process of combining different representations of knowledge about the world into a unified, coherent framework from which new insights can be generated. In contrast, weak knowledge integration is the process of extracting insights from the mere aggregation of existing knowledge.

Strong knowledge integration is so difficult because the many different representations of knowledge about the world that are relevant to a given problem are often incompatible in terms of epistemology and methodology, as well as in how they are codified and communicated. Integrating them involves an intricate act of reasoning, in which knowledge from one domain is interpreted and expressed in terms of another.

The “act of reasoning” involves several abilities: symbolic, common-sense, analogical, and compositional reasoning. The last is particularly important because it involves the ability to recognize and understand novel relationships between known elements. However, evaluations of large language models show that they excel primarily at symbolic reasoning in tasks such as mathematics and coding, while struggling with other forms of reasoning in open-ended domains.

While experimentation with artificial intelligence in knowledge integration is warranted, we believe that the opportunities for using today’s artificial intelligence in transdisciplinary research lie in tasks related to weak rather than strong knowledge integration.

Artificial intelligence research and development should learn from transdisciplinary theory and practice

Using artificial intelligence in transdisciplinary research with a critical mindset is an important starting point. As transdisciplinary researchers and practitioners, however, we must not stop here. Given how it is currently produced and deployed, any use of generative artificial intelligence contributes to various forms of severe social and environmental harm. While this realization might lead some to argue that the use of generative artificial intelligence (in transdisciplinary research) is inherently unethical, we believe this conclusion is not inevitable.

On the contrary, we argue that, by productively engaging with artificial intelligence, the transdisciplinary community should help pave the way for its ethical use and development. As transdisciplinary practitioners and transdisciplinary institutions, one thing we can do is use open-source artificial intelligence whenever possible and advocate for a practice of openness that, crucially, includes the models’ training data.

Even more importantly, the transdisciplinary community should support initiatives that promote artificial intelligence in the public interest. Specifically, it should make the case that artificial intelligence research and development must be transdisciplinary; otherwise, the benefits and burdens of the technology will continue to be distributed unevenly.

What do you think? How have you used artificial intelligence in your work? Are there other advantages and cautions that you would add?

To find out more:

Keil, F. and Stein, M. (2025). Opportunities and Risks of Using AI Technologies in Transdisciplinary Research. ISOE-Materialien Soziale Ökologie (ISOE Materials Social Ecology), 77. Institute for Social-Ecological Research (ISOE): Frankfurt am Main, Germany. (Online – open access) (DOI): https://doi.org/10.5281/zenodo.15535709

This report explains why and how generative artificial intelligence can help accomplish transdisciplinary research tasks and provides links to specific tools and resources for further learning. The report focuses on tools that do not require programming skills.

Reference:

Crawford, K. (2021). Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press: New Haven, USA and London, UK.

This blog post originally appeared in Integration and Implementation Insights (https://i2insights.org/2025/08/19/transdisciplinarity-and-artificial-intelligence/#more-43072) as “Transdisciplinary research with and for artificial intelligence” and is reposted with the authors’ permission.